Still trying to decide whether an X-E1 is a worthwhile upgrade from the GH2. Clearly the video capabilities on the X-E1 are worse, but I’m really after the photo IQ, and here’s a pretty shocking example of what the difference could be.

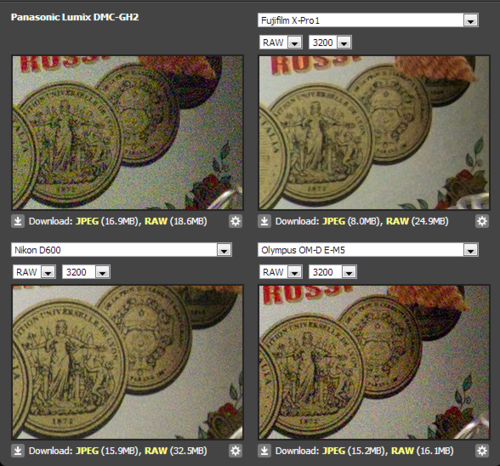

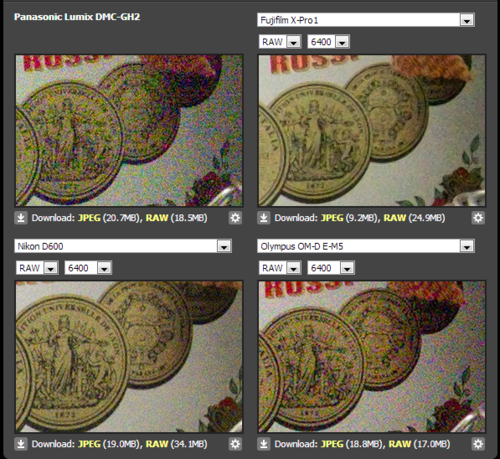

Below is a comparison of the GH2, the X-Pro1 (which has the same sensor as the X-E1), the new Nikon D600, and the Olympus OM-D (these are all from dpreview.com). The difference between the GH2 and the X-Pro1 is pretty shocking, and so is the similarity between the X-Pro1 and the D600. The D600 looks like it has a bit more resolution, but I’d say that if you scaled it down to X-Pro1 resolution, it would look about the same.

Other supposedly high-end cameras with APS-C sensors, like the Sony NEX-7, don’t even come close to this. This seems like it should be physically impossible. A different color filter pattern alone wouldn’t explain such a difference, would it?

Update: there are some hints about this in this interview.

DE: This may also be a question for the sensor engineers, but the X-Trans sensor did very well with high-ISO noise. Overall noise levels were low, but what was there was very fine-grained, and the noise reduction seemed to do a very good job of holding onto fine detail. Can you say anything about how the X-Trans differs from previous sensors in ways that contribute to good noise performance?

HS: Let me try to explain. As we explained earlier, the current system has no low-pass filter. This makes it easier to recognize noise vs. signal. To achieve good image quality, we optimize the signal part itself to make the image. Then when it comes to the noise, it’s much easier to recognize whether it’s noise or not. Especially for the color noise.

DE: Ah, so it’s not just that the sensor itself is more sensitive, but because of the way the sensor works, and the fact that you don’t have a low-pass filter smooshing your signal around, you can do a better job of noise reduction.

HS: Yes. The first basic concept, as I explained, is how much easier it is to recognize noise signal. And secondly, after it recognizes the noise itself, another important point is the location of the noise signal. Location, meaning that when we have a large colored area with some colored noise, that’s no problem for the noise reduction, we can eliminate the noise there. But when the system finds some color noise along edges, it’s a more sensitive situation, and we can minimize the noise reduction. By doing this, we can maintain high image quality because too much noise reduction can obscure edges and lose fine detail.
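To get my head around that last answer, here is a minimal sketch of the general idea as I understand it: smooth color noise in flat areas, and back off where there is an edge. This is only an illustration, not Fujifilm's actual processing. The function names, the 3x3 box average, and the `edge_threshold` value are all my own assumptions, and images are plain nested lists to keep it self-contained.

```python
def edge_strength(luma, y, x):
    """Rough edge measure: sum of absolute central differences of
    brightness at (y, x), with indices clamped at the image borders."""
    h, w = len(luma), len(luma[0])
    gx = luma[y][min(x + 1, w - 1)] - luma[y][max(x - 1, 0)]
    gy = luma[min(y + 1, h - 1)][x] - luma[max(y - 1, 0)][x]
    return abs(gx) + abs(gy)


def box_mean(img, y, x):
    """Mean of the 3x3 neighbourhood around (y, x), cropped at the borders."""
    h, w = len(img), len(img[0])
    vals = [img[j][i]
            for j in range(max(y - 1, 0), min(y + 2, h))
            for i in range(max(x - 1, 0), min(x + 2, w))]
    return sum(vals) / len(vals)


def denoise_chroma(luma, chroma, edge_threshold=20):
    """Average the color channel where the brightness is flat;
    leave it untouched where the brightness shows an edge.
    edge_threshold is an arbitrary illustrative value."""
    out = [row[:] for row in chroma]  # copy so the input is not modified
    for y in range(len(chroma)):
        for x in range(len(chroma[0])):
            if edge_strength(luma, y, x) < edge_threshold:
                out[y][x] = box_mean(chroma, y, x)
    return out


# Brightness with a vertical edge between columns 2 and 3.
luma = [[10, 10, 10, 200, 200, 200] for _ in range(3)]
# Flat color with two noise spikes: one in a flat area, one on the edge.
chroma = [[50] * 6 for _ in range(3)]
chroma[1][0] = 95  # flat area: gets averaged down
chroma[1][3] = 95  # on the edge: left alone
result = denoise_chroma(luma, chroma)
```

In this toy version the spike in the flat area gets averaged away while the one on the edge is kept as-is, which is the trade-off HS describes: less noise reduction along edges so that fine detail is not smeared. A real pipeline would presumably blend gradually rather than use a hard threshold.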